NEW DEVELOPMENT!

We call it SmartTrim. Instead of clobbering the entire virtual memory subsystem, it selectively targets background RAM hogs. Instead of having an atrocious implementation, as do too many competitors, it does things right.

Our plan with this new technology is to let the user choose specific processes and set thresholds for those processes. We also offer an 'AI' mode, where SmartTrim makes its own decisions.

Check it out in Process Lasso. SmartTrim is disabled by default because we still do not believe RAM optimization is necessary for most users. However, we accept the fact that some advanced users can use it to good effect.

And, remember, Bitsum is a bundle-free company. Our installers are safe and clean. You can safely try our software without risk of infesting your PC with crud from install bundles.

Virtual Memory is an abstraction layer. It allows for many cool things, including letting processes allocate more memory than there is RAM. Pages of memory not actively in use can be paged out to disk (the page file). When a process references memory that is not currently in RAM, that memory is "paged in" on demand. This is called a hard page fault.
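To make the terms concrete, here is a toy Python model of demand paging. It is a sketch under loudly stated assumptions: the class name `DemandPager`, the least-recently-used eviction policy, and writing every evicted page straight to the page file are hypothetical simplifications, not how Windows or any other OS actually manages RAM. A page's very first touch is counted as a zero-fill, since there is nothing on disk to read yet; only touching a page that was previously paged out counts as a hard page fault.

```python
from collections import OrderedDict


class DemandPager:
    """Toy demand-paging model (hypothetical, not a real OS algorithm).

    RAM holds at most `ram_frames` pages. Pages are brought into RAM only
    when touched. When RAM is full, the least recently used page is written
    to the page file to make room.
    """

    def __init__(self, ram_frames):
        self.ram_frames = ram_frames
        self.resident = OrderedDict()  # pages in RAM, least recently used first
        self.page_file = set()         # pages currently paged out to disk
        self.hard_faults = 0           # page-ins that had to read the page file
        self.zero_fills = 0            # first touches: nothing on disk to read

    def touch(self, page):
        if page in self.resident:
            self.resident.move_to_end(page)  # now the most recently used page
            return
        if page in self.page_file:
            self.hard_faults += 1            # paged in on demand from disk
            self.page_file.remove(page)
        else:
            self.zero_fills += 1             # brand-new page, just allocate it
        if len(self.resident) >= self.ram_frames:
            victim, _ = self.resident.popitem(last=False)  # evict the LRU page
            self.page_file.add(victim)                     # page it out
        self.resident[page] = None


pager = DemandPager(ram_frames=3)
for page in [1, 2, 3, 1, 4, 2]:
    pager.touch(page)
# Page 2 was evicted when page 4 arrived, so touching it again is a hard fault.
print(pager.hard_faults, pager.zero_fills)  # 1 4
```

With only three frames, bringing in page 4 pushes out page 2 (the least recently used), and the next reference to page 2 has to be read back from the page file.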

The virtual memory manager of the Operating System is responsible for making optimal use of your RAM - that is, keeping it (RAM) as FULL of useful stuff as possible. You want it FULL because it is the fastest storage medium on your PC. Any memory called 'free' by the old Windows Task Manager is available for use as a file/disk cache. In Vista+, SuperFetch was added, allowing a smart cache of frequently accessed data that could be pre-fetched when the OS was booting up, and maintained and updated as it ran.

In order to make sure there is sufficient free RAM for a disk and file cache, or to provide for the needs of other applications, the virtual memory manager tries to free up unused RAM by paging out memory that has not been referenced recently. Makes sense, eh? The details of how quickly it does this are dependent on the virtual memory manager itself. It varies from OS to OS.
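As a rough illustration of "paging out memory that has not been referenced recently", the hypothetical `aging_scan` function below counts how many scans in a row each resident page went unreferenced, and trims pages that stay idle too long. The `referenced` set stands in for hardware accessed bits, and `max_idle` is an invented tuning knob. Real virtual memory managers weigh far more inputs, and, as noted above, their policies vary from OS to OS.

```python
def aging_scan(idle, referenced, max_idle):
    """One pass of a simplified, hypothetical working-set trimmer.

    idle:       dict mapping each resident page to the number of consecutive
                scans in which it was not referenced.
    referenced: set of pages touched since the last scan (a stand-in for
                hardware accessed bits); cleared by this pass.
    max_idle:   how many idle scans a page survives before being trimmed.

    Returns the pages trimmed (paged out) during this pass.
    """
    trimmed = []
    for page in list(idle):        # copy keys so we can delete while looping
        if page in referenced:
            idle[page] = 0         # recently used: keep it resident
        else:
            idle[page] += 1        # one more scan without a reference
            if idle[page] >= max_idle:
                del idle[page]     # not referenced recently: page it out
                trimmed.append(page)
    referenced.clear()             # like resetting the accessed bits
    return trimmed


idle = {10: 0, 11: 0, 12: 0}       # three resident pages
referenced = {10, 11}              # page 12 was not touched
first = aging_scan(idle, referenced, max_idle=2)   # []
referenced.update({10})            # only page 10 touched this time
second = aging_scan(idle, referenced, max_idle=2)  # [12]
```

Page 12 goes two scans without a reference and is trimmed; page 11 has missed only one scan, so it stays resident for now.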

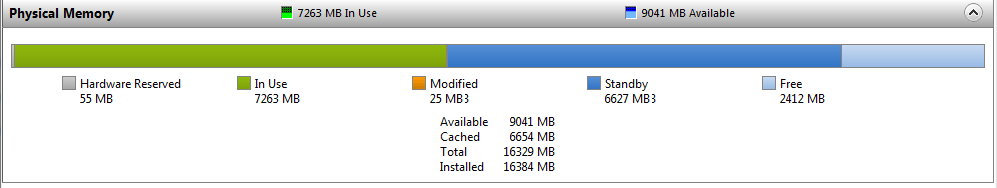

Some people believe that it is not aggressive enough in paging out unreferenced virtual memory. That *might* be true in some cases, since the user knows what he or she is going to do with the PC next. Therefore, forcing the page out of one or more processes may be desired in some cases. HOWEVER, it *only* matters if your RAM is otherwise 100% filled with both process-allocated memory and disk/file cache data. You can check this in Vista+ with the new Resource Monitor. It shows how your RAM is actually utilized, and whether any of it is *truly* free (no cache in it). If this figure is large, then you have plenty of RAM already, more than the OS has even had a chance to fill with cached data. If it is 0, or near 0, then you could *potentially* gain a *marginal* amount of optimization by forcing a page out of one or more running processes.

Paging out one or more processes in their entirety has downsides. First, some percentage of the pages will immediately be paged back in, as they are still referenced by running threads in the paged-out applications. This incurs a re-load penalty *after* the initial penalty of paging out. Of course, the penalty is likely small, as secondary caches probably caught some of this. However, it does free up more RAM for use by new applications, or for a file/disk cache.
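The trade-off can be sketched with another hypothetical model: a process's whole working set is forcibly paged out, then its threads keep touching a small "hot" subset. The function name and the page counts are invented for illustration.

```python
def force_trim_then_run(working_set, hot_pages, steps):
    """Hypothetical model: page out an entire working set, then resume work.

    working_set: pages resident before the forced page-out.
    hot_pages:   pages the process's running threads keep touching.
    steps:       how many rounds of normal activity to simulate.

    Returns (refaults, resident, still_out).
    """
    still_out = set(working_set)  # forced page-out: every page leaves RAM
    resident = set()
    refaults = 0
    for _ in range(steps):
        for page in hot_pages:
            if page in resident:
                continue
            if page in still_out:
                refaults += 1          # re-load penalty paid after the trim
                still_out.remove(page)
            resident.add(page)
    return refaults, resident, still_out


refaults, resident, still_out = force_trim_then_run(
    working_set=range(100), hot_pages=range(20), steps=5
)
print(refaults, len(resident), len(still_out))  # 20 20 80
```

In this model the 80 cold pages really are freed, which is the upside; each of the 20 hot pages costs an extra page-in, which is the penalty, and later rounds cost nothing more. On Windows, trimmed pages typically go to the standby or modified list before reaching disk, so many such re-faults are cheaper soft faults; the model does not make that distinction.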

So, does software that pages out one or more processes work for you? Maybe. You tell me. I've always been pessimistic about the possibility of any substantial aid, if any at all - BUT, I'm open to hearing reports. Can a user more accurately predict their behavior than an algorithm? Does it matter enough to warrant their time? These are questions dependent on MANY factors.

Many applications today page themselves out when they are minimized, or when parts of them go inactive. They do this to reduce their effective RAM footprint. Therefore, for many processes, additional paging out makes little difference. For almost all processes, over the long term, the same amount of RAM is probably consumed regardless of whether it is forcefully paged out or not. After all, over time, the pages a process uses are paged back in, and the pages it doesn't use are paged out.

There are some myths out there though, or perhaps just terminology problems.

First, RAM stands for Random Access Memory. Any location in RAM can be read or written equally fast. There is no USE in the physical RAM backing virtual memory being contiguous, nor does any head seek need to be done (because it's not an HDD). There is no pre-fetching like on HDDs; it just doesn't work like that. If you labeled pages with the letters of the alphabet, pages A and Z could be read or written as fast as pages A and B.

Defragmentation of RAM is a myth - or, more specifically, any performance concerns from fragmentation of RAM are a myth. The only time it ever mattered whether RAM was contiguous was back before we had virtual memory. Back then, you wanted it contiguous to make optimal use of your RAM. Since it wasn't 'mapped' into an abstracted layer called virtual memory, applications had to find a 'hole' big enough for them to fit in. Those were the old days though.
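A toy comparison shows why contiguity stopped mattering. Both functions are hypothetical illustrations over a set of free physical frame numbers: `find_contiguous_run` models the old days, when a program needed one physically adjacent hole, while `map_contiguous_virtual` models paging, where a contiguous range of virtual pages can be backed by any free frames, scattered or not. Real page tables are multi-level hardware structures, not Python dicts.

```python
def find_contiguous_run(free_frames, num_pages):
    """Pre-virtual-memory model: return the first frame of a run of
    num_pages physically adjacent free frames, or None if there is none."""
    run_start, run_len, prev = None, 0, None
    for frame in sorted(free_frames):
        if prev is not None and frame == prev + 1:
            run_len += 1                   # extends the current adjacent run
        else:
            run_start, run_len = frame, 1  # gap: start a new run here
        if run_len == num_pages:
            return run_start
        prev = frame
    return None


def map_contiguous_virtual(free_frames, num_pages, base_vpn=0):
    """Paging model: back num_pages contiguous virtual pages with ANY free
    frames. Returns a {virtual page: physical frame} table, or None if there
    are not enough free frames in total. Chosen frames leave free_frames."""
    if len(free_frames) < num_pages:
        return None
    frames = sorted(free_frames)[:num_pages]  # adjacency does not matter
    free_frames.difference_update(frames)
    return {base_vpn + i: frame for i, frame in enumerate(frames)}


free = {1, 3, 5, 7, 9}                  # scattered free frames
run = find_contiguous_run(free, 4)      # no 4 adjacent frames exist
table = map_contiguous_virtual(free, 4)
print(run, table)  # None {0: 1, 1: 3, 2: 5, 3: 7}
```

With frames 1, 3, 5, 7 and 9 free, the old approach finds no room for four pages, while the paged approach succeeds and the program still sees virtual pages 0 through 3 as one contiguous range.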

There is such a thing as heap fragmentation, but that is an issue for software developers. This is where a process allocates and frees memory so often, and in such odd sizes, that its heap ends up riddled with small gaps, wasting memory and adding allocator overhead.
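Here is a minimal sketch of the problem, assuming a hypothetical first-fit allocator over a 100-byte arena (real heap managers are far more sophisticated). After freeing every other block, half the arena is free, yet a 30-byte request fails because no single gap is large enough. Everything happens inside the allocator's own bookkeeping, which is exactly why an external tool cannot fix it.

```python
class FirstFitHeap:
    """Toy first-fit allocator over a fixed arena (hypothetical model)."""

    def __init__(self, size):
        self.free = [(0, size)]  # sorted (offset, length) list of free gaps

    def alloc(self, length):
        """Return the offset of the first gap that fits, or None."""
        for i, (offset, gap) in enumerate(self.free):
            if gap >= length:
                if gap == length:
                    del self.free[i]  # gap used up exactly
                else:
                    self.free[i] = (offset + length, gap - length)
                return offset
        return None  # no single gap is large enough

    def release(self, offset, length):
        """Return a block to the free list, merging it with adjacent gaps."""
        merged = []
        for start, size in sorted(self.free + [(offset, length)]):
            if merged and merged[-1][0] + merged[-1][1] == start:
                prev_start, prev_size = merged[-1]
                merged[-1] = (prev_start, prev_size + size)
            else:
                merged.append((start, size))
        self.free = merged

    def total_free(self):
        return sum(size for _, size in self.free)

    def largest_gap(self):
        return max((size for _, size in self.free), default=0)


heap = FirstFitHeap(100)
offsets = [heap.alloc(10) for _ in range(10)]  # fills the arena: 0, 10, ..., 90
for offset in offsets[::2]:                    # free every other block
    heap.release(offset, 10)
big = heap.alloc(30)
print(heap.total_free(), heap.largest_gap(), big)  # 50 10 None
```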

If you are out of virtual address space in, say, a 32-bit process, there is nothing an external utility can do. The pointers to the allocated virtual memory are all stored internally in the application, and no third-party tool has any way to reallocate or redirect them. So, if you have a 32-bit game that needs more memory, no external utility can help.

Any claims about 'freeing unused DLLs' or 'freeing leftover stuff' are false. Since each process has its own completely separate virtual address space, you can imagine how easy it is for the OS to make sure that memory is completely and utterly freed. ALL virtual memory used by a process is completely freed when that process terminates. Memory leaks of various types can NOT be fixed by external utilities. These must be fixed by the application developer. Only the application knows which memory it intends to reference again. That is the very definition of a memory leak: memory accidentally left allocated that the application has no intention of ever accessing again.
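The same point holds in a garbage-collected language. In the hypothetical Python classes below, `LeakyRequestLog` keeps every request it has ever seen, even though nothing reads the old entries again. That memory is still referenced, so neither the garbage collector nor any outside tool can tell it is garbage; only the developer knows, and the fix (here, a bounded `collections.deque`) has to be made in the application itself.

```python
from collections import deque


class LeakyRequestLog:
    """Hypothetical component with a leak: history grows without bound."""

    def __init__(self):
        self.history = []

    def handle(self, request):
        self.history.append(request)  # leak: never read again, never removed
        return len(request)


class BoundedRequestLog:
    """The developer's fix: keep only the most recent `keep` entries."""

    def __init__(self, keep=100):
        self.history = deque(maxlen=keep)

    def handle(self, request):
        self.history.append(request)  # oldest entry is dropped once full
        return len(request)


leaky, bounded = LeakyRequestLog(), BoundedRequestLog(keep=100)
for i in range(1000):
    request = f"request-{i}"
    leaky.handle(request)
    bounded.handle(request)
print(len(leaky.history), len(bounded.history))  # 1000 100
```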

Sadly, virtual memory is complex enough that there are many misunderstandings. Know the facts.

Virtual Memory management at a low level is *much* more complex than I've laid out here. I mean, you would be shocked at just how sophisticated the management of it is. That is why it is best left alone, in almost all cases. Besides, these days most applications themselves will page their own inactive memory out before the virtual memory manager even has a chance to do it, so that their perceived memory footprint is lessened. Yes, it is a trick many developers use to hide their real virtual memory utilization.
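For the curious, one documented way a Windows process can trim its own working set is to call `SetProcessWorkingSetSize` on itself with both the minimum and maximum set to `(SIZE_T)-1`, which tells Windows to remove as many pages as possible from the working set. Which call any particular application actually uses is not something we know; the Python `ctypes` sketch below is only an illustration, and the wrapper name `trim_own_working_set` is hypothetical. It returns `False` on non-Windows platforms. Trimmed pages go to the standby or modified lists first rather than straight to disk, which is why the reported working set drops immediately even though the data may still be sitting in RAM.

```python
import ctypes
import sys


def trim_own_working_set() -> bool:
    """Ask Windows to remove as many pages as possible from this process's
    working set. Returns True on success, False on failure or non-Windows."""
    if sys.platform != "win32":
        return False  # the Win32 API is not available elsewhere

    kernel32 = ctypes.WinDLL("kernel32", use_last_error=True)

    # HANDLE GetCurrentProcess(void): a pseudo-handle to this process.
    kernel32.GetCurrentProcess.restype = ctypes.c_void_p
    kernel32.GetCurrentProcess.argtypes = []

    # BOOL SetProcessWorkingSetSize(HANDLE, SIZE_T minimum, SIZE_T maximum)
    kernel32.SetProcessWorkingSetSize.restype = ctypes.c_int
    kernel32.SetProcessWorkingSetSize.argtypes = [
        ctypes.c_void_p, ctypes.c_size_t, ctypes.c_size_t,
    ]

    size_t_max = ctypes.c_size_t(-1).value  # equivalent of (SIZE_T)-1
    handle = kernel32.GetCurrentProcess()
    return bool(kernel32.SetProcessWorkingSetSize(handle, size_t_max, size_t_max))


if __name__ == "__main__":
    print("trimmed:", trim_own_working_set())
```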

Summing it up

Other rules to live by